While working on my latest project, I wanted an easy web interface for uploading images into OCI object storage on ZFSSA by choosing a file on my local file system.

In this blog post, I walk through the steps I used to create a page in my APEX application that lets a user choose a file on their PC and upload it (an image, in my case) to OCI object storage on ZFSSA.

Below are the steps I followed.

Configure ZFSSA as OCI object storage

First, configure your ZFSSA as OCI object storage. The Oracle ZFS Storage Appliance documentation covers this setup in detail.

During this step you will:

- Create a user on ZFSSA that will be the owner of the object storage.
- Add a share that is owned by the object storage user.
- Set the share's protocol to OCI API mode "Read/Write".
- Under the HTTP service, enable the service and enable OCI.
- Set the default path to the share.
- Add a public key for the object storage user under "Keys" within the OCI configuration.
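
The last step needs an RSA key pair. The public key goes on the ZFSSA. The private key and its fingerprint are used later by the OCI CLI and by APEX. Below is a minimal sketch using openssl, modelled on the way the OCI documentation generates API signing keys. The helper name `make_api_key` and the file names are my own assumptions, not something ZFSSA requires.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA API signing key pair in DIR and print
# its fingerprint. File names mirror the OCI CLI defaults (assumption).
make_api_key() {
  local dir="${1:-$HOME/.oci}"
  mkdir -p "$dir"

  # 2048-bit RSA private key, readable only by its owner.
  openssl genrsa -out "$dir/oci_api_key.pem" 2048 2>/dev/null
  chmod 600 "$dir/oci_api_key.pem"

  # Public key in PEM format: this is what gets added under ZFSSA "Keys".
  openssl rsa -pubout -in "$dir/oci_api_key.pem" \
    -out "$dir/oci_api_key_public.pem" 2>/dev/null

  # Fingerprint: colon-separated MD5 of the DER-encoded public key.
  # Take the last field, because OpenSSL versions label the output differently.
  openssl rsa -pubout -outform DER -in "$dir/oci_api_key.pem" 2>/dev/null \
    | openssl md5 -c | awk '{print $NF}'
}

# Example: make_api_key "$HOME/.oci"
```

The printed value, in the form `88:bf:...`, is what goes into the fingerprint entry of the CLI configuration and the APEX web credential.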

Create a bucket in the OCI object storage on ZFSSA

At this point you should have the OCI CLI installed and its configuration file created, so you are ready to create the bucket:

```shell
oci os bucket create --endpoint http://{ZFSSA name or IP address} --namespace-name {share name} --compartment-id {share name} --name {bucket name}
```

For my example, I used these values:

| Parameter | Value |
|---|---|
| ZFSSA name or IP address | zfstest-adm-a.dbsubnet.bgrennvcn.oraclevcn.com |
| Share name | objectstorage |
| Bucket name | newobjects |

The command to create my bucket is:

```shell
oci os bucket create --endpoint http://zfstest-adm-a.dbsubnet.bgrennvcn.oraclevcn.com \
  --namespace-name objectstorage --compartment-id objectstorage --name newobjects
```

Ensure you have the authentication information for APEX

| Parameter | Value |
|---|---|
| user | ocid1.user.oc1..{ZFS user} ==> ocid1.user.oc1..oracle |
| fingerprint | {my fingerprint} ==> 88:bf:b8:95:c0:0a:8c:a7:ed:55:dd:14:4f:c4:1b:3e |
| key_file | The file containing the private key; we will use this key in APEX |
| tenancy | Always ocid1.tenancy.oc1..nobody for ZFSSA |
| region | Always us-phoenix-1 for ZFSSA |
| namespace | share name ==> objectstorage |
| compartment | share name ==> objectstorage |
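
The OCI CLI reads user, fingerprint, key_file, tenancy and region from its configuration file, which is `~/.oci/config` by default. Namespace and compartment are not stored there; they are passed on the command line, as in the bucket command above. Below is a minimal sketch that writes such a profile. It assumes the `DEFAULT` profile name and the ZFSSA tenancy `ocid1.tenancy.oc1..nobody`. The helper name `write_zfssa_config` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an OCI CLI profile for ZFSSA object storage.
# Usage: write_zfssa_config CONFIG_PATH USER_OCID FINGERPRINT KEY_FILE
# Assumption: the DEFAULT profile; tenancy and region are the fixed ZFSSA values.
write_zfssa_config() {
  local config="$1" user="$2" fingerprint="$3" key_file="$4"
  mkdir -p "$(dirname "$config")"
  cat > "$config" <<EOF
[DEFAULT]
user=${user}
fingerprint=${fingerprint}
key_file=${key_file}
tenancy=ocid1.tenancy.oc1..nobody
region=us-phoenix-1
EOF
  # Keep the file private; the CLI complains about overly open permissions.
  chmod 600 "$config"
}

# Example (values from the table above):
# write_zfssa_config "$HOME/.oci/config" ocid1.user.oc1..oracle \
#   88:bf:b8:95:c0:0a:8c:a7:ed:55:dd:14:4f:c4:1b:3e "$HOME/.oci/oci_api_key.pem"
```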

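In APEX, configure web credentials

- Name: a name for the credential
- Static ID: the ID the upload process uses to reference the credential (mine is ZFSAPI)
- Type: OCI
- User ID: the user OCID from above
- Private key: the contents of the private key file (key_file) from above
- Tenancy ID: always ocid1.tenancy.oc1..nobody for ZFSSA
- Fingerprint: the fingerprint that matches the public/private key pair
- URL: the URL for the ZFSSA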

In APEX, create the upload region and file selector

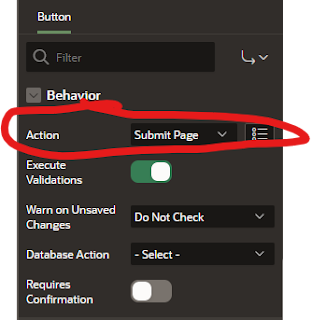

In APEX, create the button that submits the file to be stored in object storage.

In APEX, add the upload process itself

| Item | Value |
|---|---|
| File Browse Page Item | ":" followed by the name of the file selector item. Mine is ":FILE_NAME" |
| Object Storage URL | The whole URL, including the namespace and bucket name |
| Web Credentials | The static ID of the web credential created for the workspace. Mine is "ZFSAPI" |
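
The Object Storage URL follows the pattern `http://{ZFSSA name or IP address}/n/{namespace}/b/{bucket name}/o/{object name}`, which is how the process below builds it. The small shell sketch that follows assembles the same URL, which is handy for checking it by hand. The helper names are hypothetical, and the encoding assumes ASCII file names. `apex_util.url_encode` may escape a slightly different set of characters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: percent-encode every character outside [A-Za-z0-9._~-].
# Assumption: ASCII input; multi-byte characters are not handled byte by byte.
urlencode() {
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [A-Za-z0-9._~-]) out+="$c" ;;
      *) out+=$(printf '%%%02X' "'$c") ;;  # "'c" yields the character code
    esac
  done
  printf '%s' "$out"
}

# Hypothetical helper: object_url HOST NAMESPACE BUCKET FILENAME
object_url() {
  printf 'http://%s/n/%s/b/%s/o/%s\n' "$1" "$2" "$3" "$(urlencode "$4")"
}

# Example:
# object_url zfstest-adm-a.dbsubnet.bgrennvcn.oraclevcn.com objectstorage newobjects "my photo.png"
```

For the example call, the space in the file name becomes `%20`, giving `.../n/objectstorage/b/newobjects/o/my%20photo.png`.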

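```sql
declare
  l_request_url      varchar2(32767);
  l_response         clob;
  l_request_object   blob;
  l_request_filename varchar2(500);
  upload_failed_exception exception;
begin
  -- Fetch the file that the File Browse item (:FILE_NAME) staged in the temp table
  select blob_content, filename
    into l_request_object, l_request_filename
    from apex_application_temp_files
   where name = :FILE_NAME;

  -- Object URL: /n/{namespace}/b/{bucket}/o/{object name}
  l_request_url := 'http://zfstest-adm-a.dbsubnet.bgrennvcn.oraclevcn.com/n/objectstorage/b/newobjects/o/'
                   || apex_util.url_encode(l_request_filename);

  -- PUT the file contents, signed with the OCI web credential
  l_response := apex_web_service.make_rest_request(
                  p_url                  => l_request_url,
                  p_http_method          => 'PUT',
                  p_body_blob            => l_request_object,
                  p_credential_static_id => 'ZFSAPI');

  -- Any non-2xx status means the object was not stored
  if apex_web_service.g_status_code not between 200 and 299 then
    raise upload_failed_exception;
  end if;
exception
  when upload_failed_exception then
    raise_application_error(-20001,
      'Object storage upload failed with HTTP status '
      || apex_web_service.g_status_code || ': '
      || dbms_lob.substr(l_response, 1000, 1));
end;
```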
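
To confirm that the upload landed, you can list the bucket contents with the same OCI CLI setup used to create the bucket. The sketch below is minimal and uses the example endpoint, namespace and bucket as defaults. `list_uploads` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the objects in the bucket via the OCI CLI.
# Defaults are the example values from this post (assumption: change them).
list_uploads() {
  local endpoint="${1:-http://zfstest-adm-a.dbsubnet.bgrennvcn.oraclevcn.com}"
  local namespace="${2:-objectstorage}"
  local bucket="${3:-newobjects}"

  oci os object list \
    --endpoint "$endpoint" \
    --namespace-name "$namespace" \
    --bucket-name "$bucket"
}

# Example: list_uploads
```

The uploaded file should appear under its original file name in the JSON the command prints.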

In the APEX database, grant the APEX schema network access so that APEX_WEB_SERVICE can reach the ZFSSA URL:

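```sql
-- Run as a user allowed to execute DBMS_NETWORK_ACL_ADMIN (for example, SYS)
BEGIN
  DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE(
    -- '*' matches any host; the ZFSSA host name can be used instead
    host => '*',
    ace  => xs$ace_type(privilege_list => xs$name_list('connect', 'resolve'),
                        -- APEX_230200 is the APEX 23.2 schema; use the one for your release
                        principal_name => 'APEX_230200',
                        principal_type => xs_acl.ptype_db));
END;
/
```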